I’m Luke Craven; this is another of my weekly explorations of how systems thinking and complexity can be used to drive real, transformative change in the public sector and beyond. The first issue explains what the newsletter is about; you can see all the issues here.

Hello, dear reader,

This week I have been very sick! So sick that I chose to spend my weekend looking at cute photos rather than writing a fresh issue of this newsletter. As a result, this week, here’s something from the back catalogue.

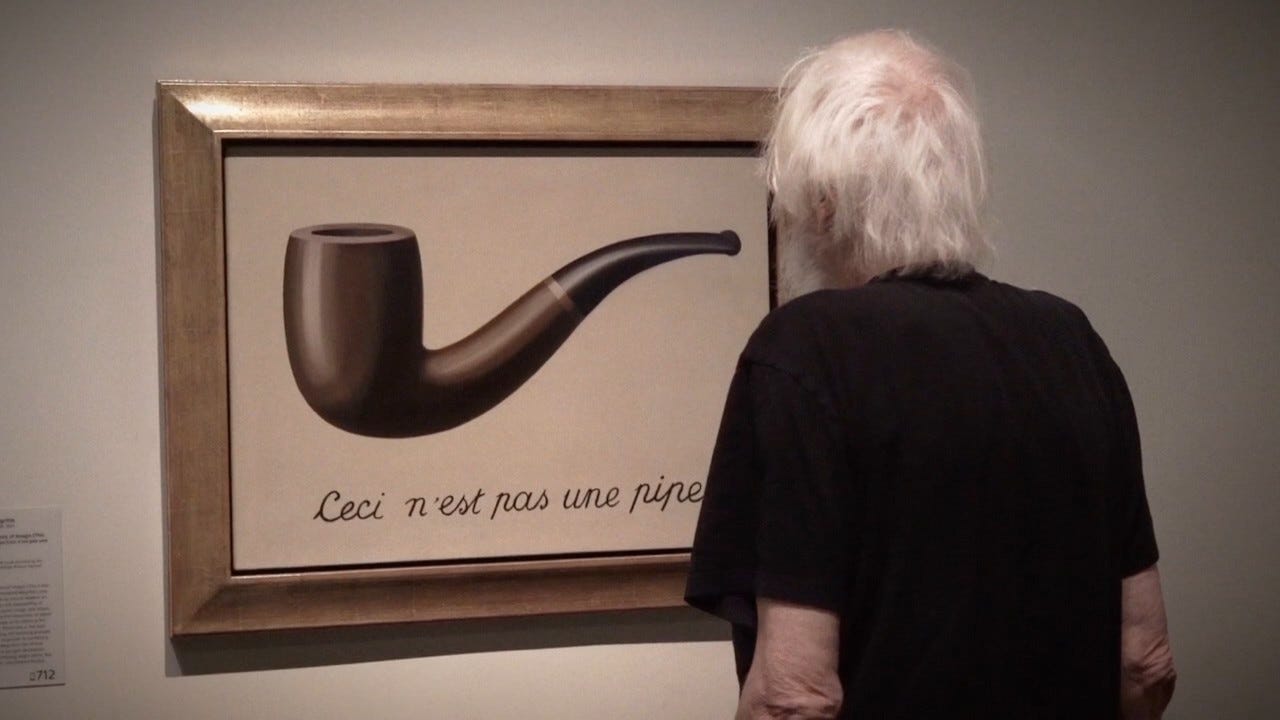

Images are treacherous, or so René Magritte would have us believe. The surrealist painter commonly played with the themes of perception, representation, and reality. In his famous work entitled The Treachery of Images, he depicted a drawing of a pipe with the caption, Ceci n'est pas une pipe ("This is not a pipe"). Of the painting he said, “if I had written on my picture "This is a pipe", I'd have been lying!”

The world of systems mapping is filled with this paradox. While a system map is often a useful representation of a context or phenomenon, it is always fundamentally incomplete.

If you were to write on a map “This is the system”, you’d be lying.

For more experienced systems practitioners, this point may seem obvious, but it is easy to confuse a map with the system it depicts. This confusion arises because of a logical fallacy called reification, or the fallacy of misplaced concreteness. It occurs when an abstraction, such as a system map or model, is treated as if it were the actual system under examination.

Understanding reification

There are several drivers of reification in the practice of systems mapping, each of which layers upon the others to limit our ability to distinguish the map from the system itself. Many of these traits are baked into our cognition, but recognising them is the first step in strengthening our ability to critically reflect on our system maps and their role in driving transformative change.

1. Premature closure and confirmation bias

Research has shown that people think about a situation only to the extent it is necessary to make sense—perhaps superficial sense—of it. When sense is achieved, people often feel no need to continue or to further iterate their understanding of a context or situation. In medicine this is referred to as premature closure or the tendency to stop too early in a diagnostic process, accepting a diagnosis before gathering all the necessary information or exploring all the important alternatives.

Systems mapping is often undertaken with limited resources, on tight deadlines, and only ever captures the dynamics of the system at a point in time. But even when a system map is explicitly couched as illustrative or provisional, the temptation is to use it for diagnosis and to drive decisions about what could or should be done to change the system.

If this view of how system maps are used is correct, confirmation bias likely compounds the issue. Confirmation bias is the tendency of people to favour information that confirms or strengthens their beliefs or values; once affirmed, those beliefs are difficult to dislodge. Having arrived at a conclusion about the structure of the system, however prematurely, our brains are predisposed to seek evidence that supports our existing understanding and to interpret new information in a way that aligns with it.

Put simply, confirmation bias makes us overconfident that our system maps are an accurate depiction of reality, and leads us to discount evidence that they are not.

2. Pattern recognition, pareidolia and Type I errors

Our brains are pattern-detection machines that connect the dots, making it possible to uncover meaningful relationships among the barrage of sensory input we face. Pattern recognition is crucial for human decision-making and survival, but we also make mistakes (i.e. seeing a pattern where none really exists) all the time. These mistakes are what statisticians would call Type I errors, or false positives.

Pareidolia, for example, is a form of pattern recognition involving the perception of images or sounds in random stimuli, such as seeing shapes in clouds, or seeing faces in inanimate objects or abstract patterns. Pareidolia happens when you convince yourself, or someone tries to convince you, that some data reveal a significant pattern when really the data are random or meaningless.
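To make the idea of a false positive concrete, here is a minimal sketch in Python. It is an illustration only, not something drawn from a real systems mapping project: the function names, the 20 variables, the 10 observations each, and the cut-off of about 0.632 (roughly the conventional 5% significance threshold for a correlation across 10 observations) are all assumptions chosen for the demonstration. Every variable is pure random noise, so any "relationship" it finds is a Type I error.

```python
import itertools
import random
import statistics


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def count_spurious_links(n_variables=20, n_observations=10,
                         threshold=0.632, seed=0):
    """Count pairs of unrelated noise series whose correlation looks 'significant'.

    All parameters are hypothetical defaults picked for illustration.
    """
    rng = random.Random(seed)
    # Each "variable" is independent Gaussian noise: no real structure exists.
    series = [[rng.gauss(0, 1) for _ in range(n_observations)]
              for _ in range(n_variables)]
    pairs = list(itertools.combinations(series, 2))
    # A pair counts as a false positive if its correlation exceeds the cut-off.
    spurious = sum(1 for a, b in pairs if abs(pearson(a, b)) > threshold)
    return spurious, len(pairs)


if __name__ == "__main__":
    spurious, total = count_spurious_links()
    print(f"{spurious} of {total} pairs look related, "
          "although every series is pure noise")
```

With a 5% threshold, we would expect roughly one pair in twenty to cross the line by chance alone. On a system map, each of those pairs would be a tempting arrow between two factors that have nothing to do with each other.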

Some false positives, like seeing Jesus in a piece of toast, are largely harmless. Others, like believing a system map to be a high-fidelity capture of the system under examination, are more problematic, particularly given our vulnerability to confirmation bias.

Take the human brain, for example. At one level, our maps of the brain are exceptionally accurate. We know what parts are where, how large they are, and what their purpose is. But in terms of really understanding all the functional relationships and the ways that the regions of the brain are communicating with each other, our understanding is limited. We don’t know whether regions are communicating through photonic or acoustic pathways, for example, but scientists infer, identify, and map patterns of communication regardless.

The mistake is believing a map to be a complete or accurate depiction of reality, which is why researchers typically conduct multiple studies to examine their research questions and continue to iterate different maps at different levels of detail and granularity. Having multiple maps and recognising that every map is incomplete helps avoid Type I errors and the reification of one view of a system over others.

3. System maps as silver bullets

This raises yet another paradox: if having multiple maps helps us build a more accurate picture of the actual situation or phenomenon under investigation, why do so many systems projects produce a single map?

There are countless examples. From the Foresight Obesity Map to single outputs of group model building exercises, the message is the same: you only need one map to understand the dynamics of this system. This message plays into our cognitive biases and contributes to the reification of a certain view of the world.

This push towards one map per project or problem is possibly driven by our desire for dominant monocausal explanations. Humans are energy-minimising cognitive systems, and probably for good evolutionary reasons. Monocausal explanations require less brain power to execute than complex explanations, even though that biases us towards solutions that look or feel as if they are a single silver bullet.

We don’t recognise this bias in the practice of systems mapping, but producing one map for a particular system is the same as prescribing one treatment for a particular illness.

In both cases, there is no silver bullet. Multicausal explanations are necessary if we are going to properly engage with and map complex systems, even if using them is not our default cognitive setting.

Overcoming our biases

Almost every moment throughout the day, we are making decisions. However, most of us are unaware of the thoughts, buried beliefs, prejudices and biases that influence those decisions, and so we rarely notice how they shape the choices we make.

Systems practitioners are probably better than most at being aware of the more common cognitive biases, but ultimately, we are all human and our brains are very good at playing tricks on us. The world of systems mapping is not immune to the challenges of confirmation bias, nor to our desire to understand the world through monocausal goggles.

While many biases are universal, they impact different areas of practice in divergent and unpredictable ways. Identifying these biases and their impact on how we map the systems around us will help to bring more consciousness and humility into the work of systems practice.

By the way: This newsletter is hard to categorise and probably not for everyone—but if you know unconventional thinkers who might enjoy it, please share it with them.

Find me elsewhere on the web at www.lukecraven.com, on Twitter @LukeCraven, on LinkedIn here, or by email at <luke.k.craven@gmail.com>.

Great discussion. A good analysis and why I find it futile to build a system map. Shortly after I build it, it seems to become obsolete. On the other hand, I could argue, why make my bed up, I'm just going to sleep in it again 12 hours from now anyway. There's something about staying in space between chaos and order, where we aren't totally in one or another, that makes us want to keep organizing. I happen to also like unrumpled sheets at the end of a long day, so I make my bed in the morning. I'm not yet convinced about systems maps, and I was a practicing systems engineer from 1982 to 1990.

Is this a comment or a representation of a comment?